Reflecting on my previous experience in data analysis and project management, and comparing it with what is already in my portfolio, I thought I’d take this opportunity to plan out another project to display. Specifically, this plan is about translating raw data into meaningful information that stakeholders can base decisions on. Having lived in a rural area for an extended period of time, I take the occasional trip into the “city” to get supplies and ease the cost of living in the area.

Where I currently live, there are only a handful of shops that supply the goods people live on. Beyond those, there is a flourishing community market where people from the local area, and further afield, gather once a week to catch up on the past week’s events and share goods and services. That said, there is one main independent grocery chain that supplies goods to the community. Given we’re about 150 km from the closest city and there is practically no competition in the market, this chain can set prices comfortably above its average variable cost and marginal cost curves. But, I digress.

This last visit to the city reminded me of the opportunity costs that I had easily overlooked whilst living rurally. Things like transport: cars vs trains vs buses vs cabs vs Ubers vs scooters vs bikes. My mind went off onto Uber and, since it had been a while since I’d really thought about it, I wanted to develop a pipeline around whatever information I could find about the rideshare service. I trawled through the internet and came across some interesting information about the business’s effects in NYC. This was my inspiration for this pipeline plan.

I pretended that I needed to present this information to a set of managers who would be interested in certain metrics. I also wanted to make sure that I could upload new data to the pipeline easily, and that it would flow through to the end of the process without extra work. To do this, though, I had to revisit previous projects that I had completed and rework them for a new (and…let’s be honest…free) way of doing things. I was also interested in trying out some of Google Cloud Platform’s services, so I had a dive into how I would integrate them.

To complete this task, I am going to need various tools and technologies. To analyse this Uber data and present the results to users, I’m planning on using Google Cloud Storage, Python, Google Compute Engine, the Mage data pipeline tool, BigQuery, and Looker Studio. Here is a short description of each service in that combination:

Google Cloud Storage: an online file storage service provided by Google as part of its cloud computing platform. It allows you to store and retrieve your data in the cloud, making it accessible from anywhere with an internet connection.

Google Compute Engine: a cloud computing service that provides virtual machines for running applications and services. It allows you to easily create, configure, and manage virtual machines with various operating systems and hardware configurations.

BigQuery: a cloud-based data warehouse provided by Google Cloud Platform that allows you to store, analyse, and query large datasets using SQL. It is a serverless, highly scalable, and cost-effective solution that can process and analyse terabytes to petabytes of data quickly.

Looker Studio: a web-based data visualisation and reporting tool that allows you to create interactive dashboards and reports from a variety of data sources, including Google Analytics, Google Sheets, and BigQuery. It enables you to turn your data into informative and engaging visualisations, which can be easily shared and collaborated on with others.

Mage: an open-source data pipeline tool, used for transforming and integrating data.
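To make the flow between these tools a little more concrete, here is a minimal sketch of the load, transform, and export stages that a pipeline tool would chain together. It is plain Python using only the standard library, not Mage's own API, and everything in it is an assumption for illustration: the column names, the sample rows, and the list that stands in for a warehouse table.

```python
import csv
import io
from datetime import datetime

# Hypothetical sample standing in for a CSV file held in cloud storage.
# The column names and values are made up for illustration.
SAMPLE_CSV = """pickup_datetime,dropoff_datetime,passenger_count,trip_distance,fare_amount
2016-03-01 00:00:00,2016-03-01 00:07:55,1,2.50,9.0
2016-03-01 00:00:00,2016-03-01 00:11:06,1,2.90,11.0
2016-03-01 00:05:00,2016-03-01 00:31:06,2,19.98,54.5
"""


def extract(raw_text):
    """Load stage: parse raw CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Transform stage: cast types and derive the trip duration in minutes."""
    fmt = "%Y-%m-%d %H:%M:%S"
    cleaned = []
    for row in rows:
        pickup = datetime.strptime(row["pickup_datetime"], fmt)
        dropoff = datetime.strptime(row["dropoff_datetime"], fmt)
        cleaned.append({
            "pickup_datetime": pickup,
            "passenger_count": int(row["passenger_count"]),
            "trip_distance": float(row["trip_distance"]),
            "fare_amount": float(row["fare_amount"]),
            "duration_minutes": round((dropoff - pickup).total_seconds() / 60, 2),
        })
    return cleaned


def load(rows, warehouse):
    """Export stage: append rows to a list standing in for a warehouse table."""
    warehouse.extend(rows)
    return len(rows)


warehouse_table = []
loaded = load(transform(extract(SAMPLE_CSV)), warehouse_table)
print(f"Loaded {loaded} rows")
```

Keeping each stage as its own function means new data only has to enter at the first stage, which is the behaviour I want from the real pipeline.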

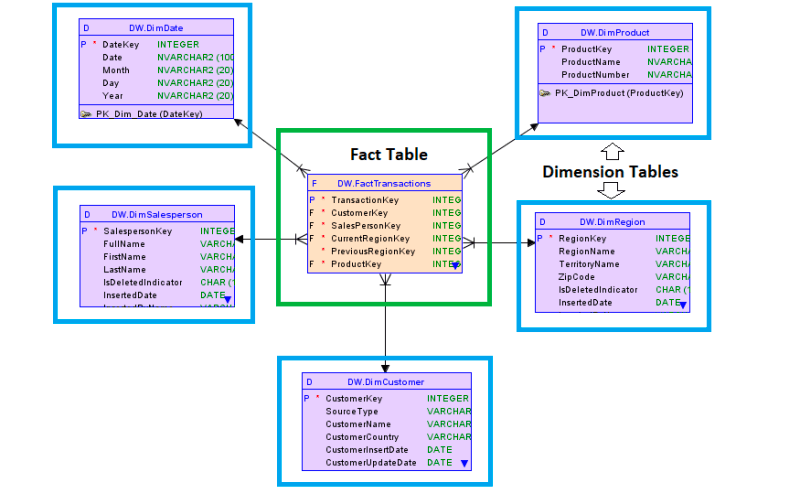

Given the data that will be used for this pipeline, the analysis is going to require a data warehouse approach. This means that I will need to create a fact table and some connecting dimension tables. The fact table holds the measurable events (for example, individual trips) along with keys that point to the dimension tables, which hold the descriptive attributes. As in a transactional database, reporting relies on joining the tables through those keys. Since I have an understanding of joins using SQL, I can create these joins for analysis. Now, to put it all together. Next week’s post will be the outcome of putting this plan into action.
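As a rough sketch of the fact-and-dimension layout described above, the example below builds one fact table and two dimension tables in an in-memory SQLite database, then reports through the fact table with a join. SQLite is only a local stand-in here, not the warehouse I plan to use, and the table names, columns, and rows are all hypothetical.

```python
import sqlite3

# In-memory database used purely as a local stand-in for a data warehouse.
conn = sqlite3.connect(":memory:")

# Two dimension tables hold descriptive attributes; the fact table holds
# the measures plus a key pointing at each dimension. All names are hypothetical.
conn.executescript("""
CREATE TABLE dim_payment_type (
    payment_type_id   INTEGER PRIMARY KEY,
    payment_type_name TEXT NOT NULL
);
CREATE TABLE dim_datetime (
    datetime_id    INTEGER PRIMARY KEY,
    pickup_hour    INTEGER NOT NULL,
    pickup_weekday TEXT NOT NULL
);
CREATE TABLE fact_trip (
    trip_id         INTEGER PRIMARY KEY,
    datetime_id     INTEGER REFERENCES dim_datetime(datetime_id),
    payment_type_id INTEGER REFERENCES dim_payment_type(payment_type_id),
    fare_amount     REAL NOT NULL
);
""")

# Made-up rows for illustration only.
conn.executemany(
    "INSERT INTO dim_payment_type VALUES (?, ?)",
    [(1, "Credit card"), (2, "Cash")],
)
conn.executemany(
    "INSERT INTO dim_datetime VALUES (?, ?, ?)",
    [(1, 8, "Monday"), (2, 18, "Monday")],
)
conn.executemany(
    "INSERT INTO fact_trip VALUES (?, ?, ?, ?)",
    [(1, 1, 1, 12.5), (2, 1, 2, 7.0), (3, 2, 1, 20.0)],
)

# Report through the fact table, joining to a dimension for readable labels.
query = """
SELECT p.payment_type_name,
       COUNT(*)                     AS trips,
       ROUND(AVG(f.fare_amount), 2) AS avg_fare
FROM fact_trip AS f
JOIN dim_payment_type AS p
  ON p.payment_type_id = f.payment_type_id
GROUP BY p.payment_type_name
ORDER BY p.payment_type_name
"""
summary = conn.execute(query).fetchall()
for row in summary:
    print(row)
```

The same pattern extends to more dimensions: each new descriptive table adds one key column to the fact table and one more join to the report query.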

Source: https://www.analyticsvidhya.com/blog/2023/08/difference-between-fact-table-and-dimension-table/
